Exploring Dify - The Next Generation AI Application Development Platform

- Authors: caphe.dev (@caphe_dev)

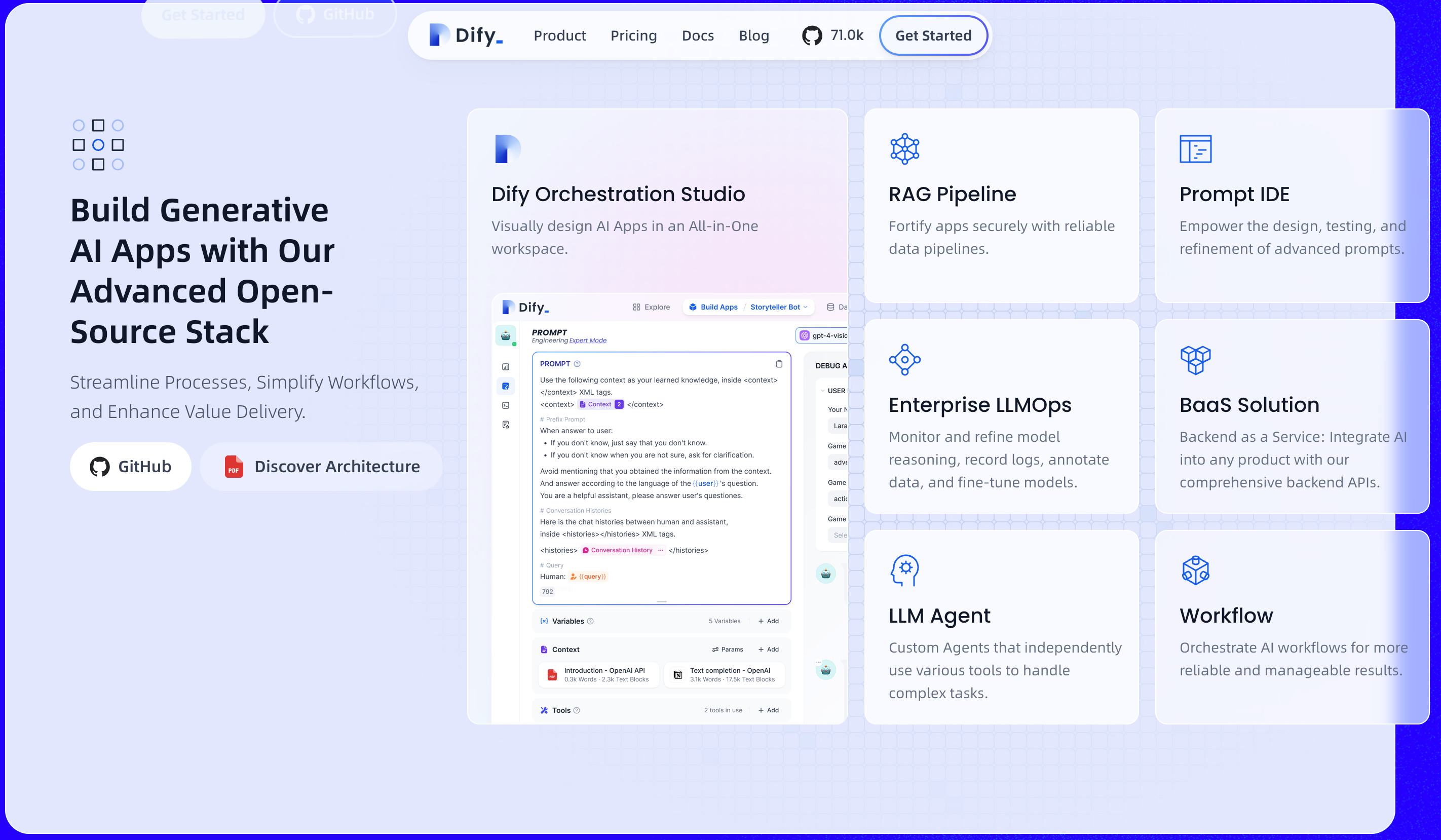

In artificial intelligence (AI) application development, Dify has emerged as a powerful open-source platform that simplifies building and operating systems based on Large Language Models (LLMs). With over 10,000 forks on GitHub and a vibrant developer community, Dify is reshaping how businesses and developers approach AI technology[1][7]. This article covers the platform's architecture, distinguishing features, and practical applications.

Overview of Dify Architecture

The Dify ecosystem is built on a microservices architecture, with independent components deployed through Docker and Kubernetes. The core codebase is written in Python (Flask) for the backend and TypeScript for the frontend, a split that lets the API and web components be built and scaled separately[6][11].

Dify's CI/CD process is implemented as a GitHub Actions workflow that targets both AMD64 and ARM64 architectures. The workflow automatically builds Docker images for the API and Web components, reuses build layers through the GitHub Actions cache, and pushes multi-architecture images to Docker Hub[1][2]. This mechanism allows for continuous updates while maintaining system stability.

Dify's security model is reinforced through Dify-Sandbox - a safe code execution environment that uses libseccomp and resource isolation mechanisms. This solution is particularly important when handling high-risk AI tasks such as web scraping or processing sensitive data[9].
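To make the idea of resource isolation concrete, here is a minimal sketch that runs a snippet in a child process under a CPU-time budget. It is not how Dify-Sandbox is implemented: it uses only Python's standard `resource` module (POSIX only) and applies no seccomp filtering. The names `run_untrusted` and `_lower_limit` are hypothetical.

```python
import resource
import subprocess
import sys


def _lower_limit(kind: int, wanted: int) -> None:
    """Lower the soft limit for one resource without exceeding the hard limit."""
    _, hard = resource.getrlimit(kind)
    if hard != resource.RLIM_INFINITY:
        wanted = min(wanted, hard)
    resource.setrlimit(kind, (wanted, hard))


def run_untrusted(code: str, cpu_seconds: int = 2, wall_seconds: float = 10.0):
    """Run a Python snippet in a child process with a CPU-time budget."""

    def limit_child() -> None:
        # Runs in the child just before exec; the kernel enforces the budget.
        _lower_limit(resource.RLIMIT_CPU, cpu_seconds)

    return subprocess.run(
        [sys.executable, "-I", "-c", code],  # -I: isolated mode, ignores user site and env vars
        capture_output=True,
        text=True,
        timeout=wall_seconds,  # wall-clock guard in addition to the CPU budget
        preexec_fn=limit_child,
    )


result = run_untrusted("print(1 + 1)")
print(result.returncode, result.stdout.strip())
```

A snippet that spins in a busy loop would be terminated by the kernel once its CPU budget is spent. A real sandbox adds system-call filtering and filesystem and network isolation on top of limits like this one.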

Breakthrough Features

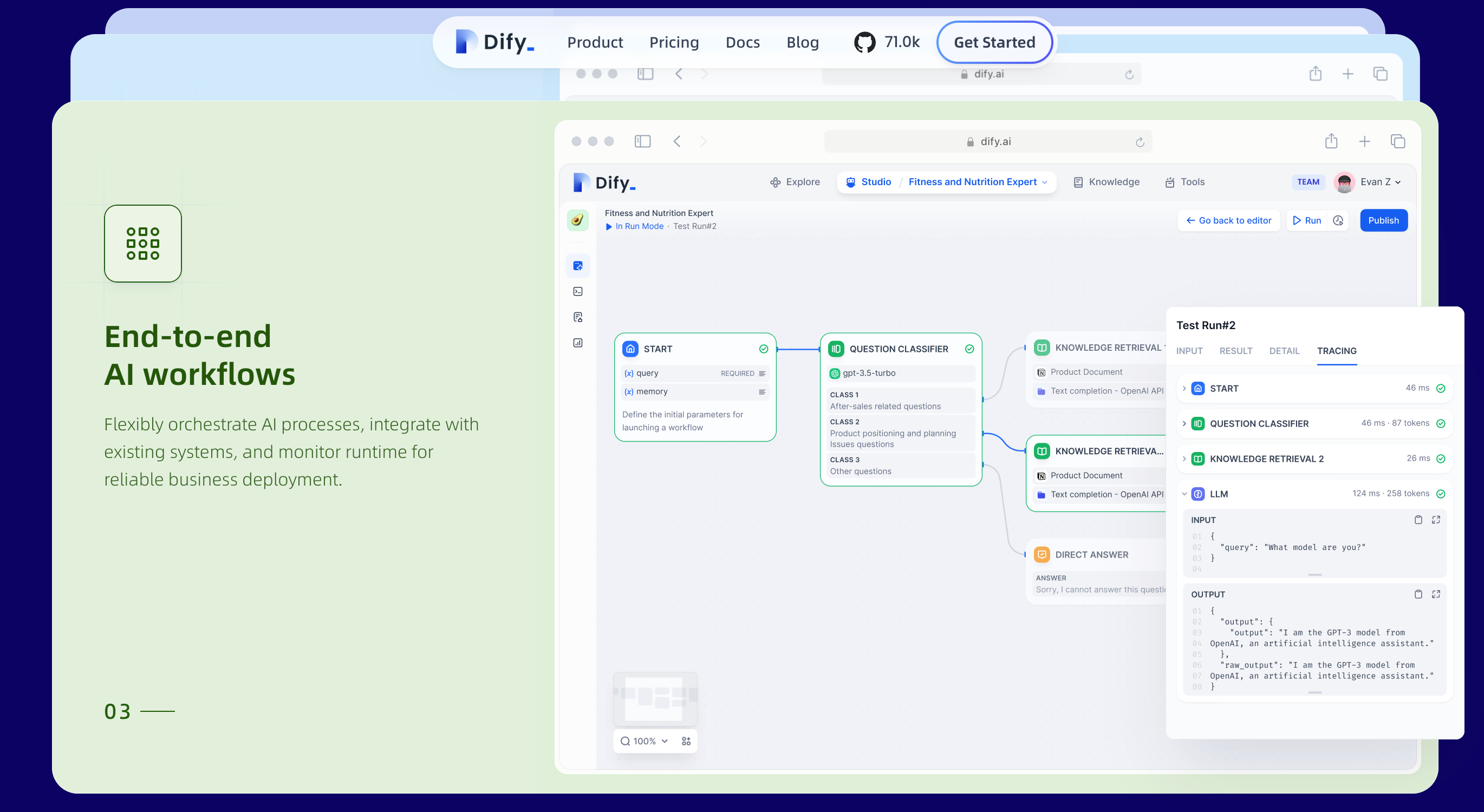

1. Visual Workflow System

Dify's drag-and-drop interface allows for the design of complex AI workflows without the need to write code. Users can combine data processing nodes, AI models, and external tools into a complete pipeline. A typical example is the workflow for cross-platform content processing that automates the data collection, analysis, and style adjustment for each social media channel[8].

2. Multi-Model LLM Support

Dify integrates over 50 top AI models from providers such as OpenAI, Anthropic, Llama, and various open-source frameworks. Its strength lies in the ability to manage model versions, conduct A/B testing, and automatically switch between models based on performance[6][10]. The Model Runtime mechanism allows for the deployment of custom models without affecting the main system.
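The idea of switching between models based on observed behavior can be sketched in a few lines. This is an illustrative toy, not Dify's Model Runtime: `ModelRouter`, `ModelStats`, and the two stand-in model functions are hypothetical, and "performance" is reduced to a simple failure rate.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class ModelStats:
    calls: int = 0
    failures: int = 0

    @property
    def failure_rate(self) -> float:
        return self.failures / self.calls if self.calls else 0.0


@dataclass
class ModelRouter:
    # Hypothetical registry: model name -> callable that takes a prompt and returns text.
    models: Dict[str, Callable[[str], str]]
    stats: Dict[str, ModelStats] = field(default_factory=dict)

    def ranked(self) -> List[str]:
        # Lowest observed failure rate first; ties keep registration order.
        return sorted(
            self.models,
            key=lambda name: self.stats.get(name, ModelStats()).failure_rate,
        )

    def complete(self, prompt: str) -> str:
        errors = []
        for name in self.ranked():
            stat = self.stats.setdefault(name, ModelStats())
            stat.calls += 1
            try:
                return self.models[name](prompt)
            except Exception as exc:  # fall through to the next model
                stat.failures += 1
                errors.append(f"{name}: {exc}")
        raise RuntimeError("all models failed: " + "; ".join(errors))


def flaky_model(prompt: str) -> str:
    raise TimeoutError("upstream timeout")


def stable_model(prompt: str) -> str:
    return f"echo: {prompt}"


router = ModelRouter(models={"primary": flaky_model, "backup": stable_model})
print(router.complete("hello"))  # the failing primary is skipped
print(router.ranked())           # backup now ranks ahead of primary
```

A production router would also weigh latency, cost, and answer quality, and would periodically retry a demoted model rather than ranking on failure rate alone.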

3. Advanced RAG Tool

Dify's Retrieval-Augmented Generation (RAG) system supports processing over 20 different document formats, from PDFs to design files. The optimized vector embedding algorithm enhances accuracy in information retrieval, particularly effective when working with large datasets[6][8].
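The retrieval step at the heart of RAG can be illustrated with a toy example. The sketch below is not Dify's implementation: it swaps the learned embedding model for plain word counts so that it runs without dependencies, and the function names and sample chunks are made up for illustration.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts. Real systems use a learned embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: List[str], top_k: int = 2) -> List[Tuple[float, str]]:
    """Return the top_k chunks most similar to the query, best first."""
    q = embed(query)
    scored = [(cosine(q, embed(chunk)), chunk) for chunk in chunks]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)[:top_k]


def build_prompt(query: str, chunks: List[str]) -> str:
    """Place the retrieved chunks in front of the question for the LLM."""
    context = "\n".join(f"- {text}" for _, text in retrieve(query, chunks))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


chunks = [
    "Refund requests are accepted within 14 days of purchase.",
    "The office is open Monday to Friday.",
    "Shipping takes three to five business days.",
]
print(build_prompt("refund policy days", chunks))
```

The structure stays the same with a real embedding model and a vector database: embed the query, rank stored chunks by similarity, and pass the best matches to the model as context.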

4. Intelligent Agent Ecosystem

Dify provides over 50 built-in tools for AI agents, from Google Search to DALL-E. The Function Calling feature allows agents to automatically select the appropriate tool based on context, while the ReAct mechanism helps handle complex multi-step tasks[6][10].
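The loop behind tool-using agents is easy to sketch: the model proposes an action, the runtime executes the matching tool, and the observation is fed back until the model decides to finish. In the toy below, `scripted_model` stands in for the LLM and both tools are hypothetical; it shows the control flow only, not Dify's agent runtime.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical tools; real agents would call search APIs, image models, and so on.
TOOLS: Dict[str, Callable[[str], str]] = {
    "calculator": lambda expr: str(sum(int(part) for part in expr.split("+"))),
    "lookup": lambda key: {"dify license": "open source"}.get(key.lower(), "not found"),
}


def scripted_model(question: str, observations: List[str]) -> Tuple[str, str]:
    """Stand-in for an LLM: returns (action, argument) based on the context so far."""
    if observations:
        return "finish", observations[-1]  # an observation exists, so answer with it
    if any(ch.isdigit() for ch in question):
        return "calculator", question
    return "lookup", question


def run_agent(question: str, max_steps: int = 3) -> str:
    """Reason-act loop: ask the model for an action, run the tool, feed back the result."""
    observations: List[str] = []
    for _ in range(max_steps):
        action, argument = scripted_model(question, observations)
        if action == "finish":
            return argument
        observations.append(TOOLS[action](argument))
    return "step limit reached"


print(run_agent("2+3+4"))
print(run_agent("Dify license"))
```

With a real model, the tool names and descriptions are sent along with the prompt and the model returns a structured tool call; the step limit guards against loops that never reach a final answer.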

Practical Applications in Business

Content Marketing Automation

A typical case study is the cross-platform content processing workflow. The system automatically collects data from the company blog, analyzes it using NLP, adjusts the style for each channel (Facebook, Instagram, Twitter), and suggests appropriate images. This process saves 70% of content production time compared to traditional methods[8].

Intelligent Customer Support

Dify enables the creation of chatbots capable of retrieving information from an internal knowledge base, handling complex queries about products/policies. The annotation reply feature improves answer accuracy by 40% while reducing LLM token costs by 30%[10].
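One way to picture annotation replies is as a lookup that runs before the model is called: if an incoming question closely matches a curated question, the curated answer is returned and no tokens are spent. The sketch below assumes that behavior and uses `difflib` string similarity in place of embedding similarity; `answer`, `fake_llm`, and the sample annotation are hypothetical.

```python
from difflib import SequenceMatcher
from typing import Callable, Dict, List


def answer(
    question: str,
    annotations: Dict[str, str],
    call_llm: Callable[[str], str],
    threshold: float = 0.8,
) -> str:
    """Return a curated answer when a close match exists, otherwise ask the LLM."""
    best_question, best_score = None, 0.0
    for known in annotations:
        score = SequenceMatcher(None, question.lower(), known.lower()).ratio()
        if score > best_score:
            best_question, best_score = known, score
    if best_question is not None and best_score >= threshold:
        return annotations[best_question]  # no LLM call, no token cost
    return call_llm(question)


llm_calls: List[str] = []


def fake_llm(question: str) -> str:
    """Stand-in for a real model call; records each question it receives."""
    llm_calls.append(question)
    return f"LLM answer to: {question}"


annotations = {"What is your refund policy?": "Refunds are accepted within 14 days."}
print(answer("what is your refund policy", annotations, fake_llm))
print(answer("How do I reset my password?", annotations, fake_llm))
print(len(llm_calls))
```

The threshold controls the trade-off: set too low, users receive curated answers to questions they did not ask; set too high, the cache rarely hits.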

Automated Data Analysis

With a workflow that integrates SQL query generation and data visualization, Dify allows for the creation of dynamic reports from raw data. The system can automatically identify patterns, detect anomalies, and suggest actions for managers[10].
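A reporting workflow of this kind boils down to two steps: run a query, then scan the result for outliers. The sketch below uses an in-memory SQLite table with made-up numbers, a fixed SQL string where an LLM would generate the query, and a simple z-score rule as the anomaly detector; all names and data are illustrative assumptions.

```python
import sqlite3
from statistics import mean, pstdev
from typing import List, Tuple


def daily_totals(conn: sqlite3.Connection) -> List[Tuple[str, float]]:
    # In an LLM-driven workflow this SQL would be generated from a natural-language request.
    query = "SELECT day, SUM(amount) FROM orders GROUP BY day ORDER BY day"
    return conn.execute(query).fetchall()


def anomalies(rows: List[Tuple[str, float]], z_threshold: float = 2.0) -> List[str]:
    """Flag days whose total is more than z_threshold standard deviations from the mean."""
    values = [total for _, total in rows]
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return []
    return [day for day, total in rows if abs(total - mu) / sigma > z_threshold]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (day TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [
        ("2024-01-01", 100), ("2024-01-02", 102), ("2024-01-03", 98),
        ("2024-01-04", 101), ("2024-01-05", 99), ("2024-01-06", 100),
        ("2024-01-07", 400),  # made-up spike for the detector to find
    ],
)
print(anomalies(daily_totals(conn)))
```

Generated SQL should run against a read-only connection or be validated before execution, since a model can emit statements that modify data.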

Comparison with Competing Solutions

The comparison table below illustrates Dify's advantages in the LLMOps ecosystem:

| Criteria | Dify | LangChain | OpenAI Assistants |
|---|---|---|---|
| Model Deployment | Multi-cloud | Local/Cloud | Cloud-only |
| Workflow Management | Visual Interface | Code-based | Limited |
| Tool Integration | 50+ Built-in | Custom Development | Basic |
| Performance Monitoring | Real-time Metrics | Third-party Tools | Limited |
| Security Model | Sandbox Environment | Basic | Enterprise-grade |

Dify stands out with its low-code approach, suitable for both technical and non-technical teams. The ability to publish workflows as APIs facilitates easy integration into existing systems[6][10].
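Calling a published workflow from existing code might look like the sketch below. The endpoint path (`/workflows/run`), the bearer-token header, and the body fields (`inputs`, `response_mode`, `user`) are assumptions based on the API reference that Dify generates for workflow apps, so check them against your own instance. The base URL, the API key, and the `topic` input are placeholders. The function only builds the request, which lets you inspect it before sending anything.

```python
import json
import urllib.request
from typing import Any, Dict


def build_workflow_request(
    base_url: str, api_key: str, inputs: Dict[str, Any], user: str
) -> urllib.request.Request:
    """Build (but do not send) a POST request that runs a published workflow."""
    payload = {
        "inputs": inputs,             # values for the workflow's start-node variables
        "response_mode": "blocking",  # assumed option: wait for the full result
        "user": user,                 # caller-chosen identifier for the end user
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/workflows/run",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder values: replace with your instance URL and the app's API key.
request = build_workflow_request(
    "http://localhost/v1", "app-your-key", {"topic": "release notes"}, "demo-user"
)
print(request.get_method(), request.full_url)
# To send it: urllib.request.urlopen(request) returns the HTTP response.
```

Keeping request construction separate from sending makes the integration easy to unit test without a running Dify instance.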

Basic Deployment Guide

To install Dify on Windows 11:

- Prepare the Environment:

  - Install Docker Desktop and Git

  - Sign up for a free Docker Hub account

- Deploy the System (run these commands in PowerShell or Git Bash):

      git clone https://github.com/langgenius/dify.git

      cd dify/docker

      cp .env.example .env

      docker compose up -d

- Access the Interface:

  - Open a browser and go to http://localhost/apps

  - Register an admin account and start designing your first AI application[12].

The cloud-native deployment process using Kubernetes can scale up to thousands of requests per second thanks to integrated auto-scaling and load balancing mechanisms.

Future Development Trends

The upcoming version of Dify promises to deliver:

- Support for multi-modal AI (image/video processing)

- Blockchain integration for immutable logging systems

- An AI governance framework that meets international standards

- A marketplace for custom workflows and models[10]

These improvements position Dify as an all-in-one platform for developing enterprise-grade AI applications.

Conclusion

Dify is not just a tool but a complete ecosystem for developing next-generation AI applications. With an open architecture, strong community support, and a clear development roadmap, this platform is becoming the top choice for both startups and large enterprises. The ability to shorten the time from prototype to production from months to days is a significant advantage that helps Dify shape the future of intelligent application development.

Sources

- [Dify.AI · The Innovation Engine for Generative AI Applications](https://dify.ai/)

- [Dify.AI (@dify_ai) / X](https://x.com/dify_ai?lang=vi)