
Open WebUI: A Powerful Self-Hosted AI Platform for Businesses and Developers

Author: caphe.dev (@caphe_dev)

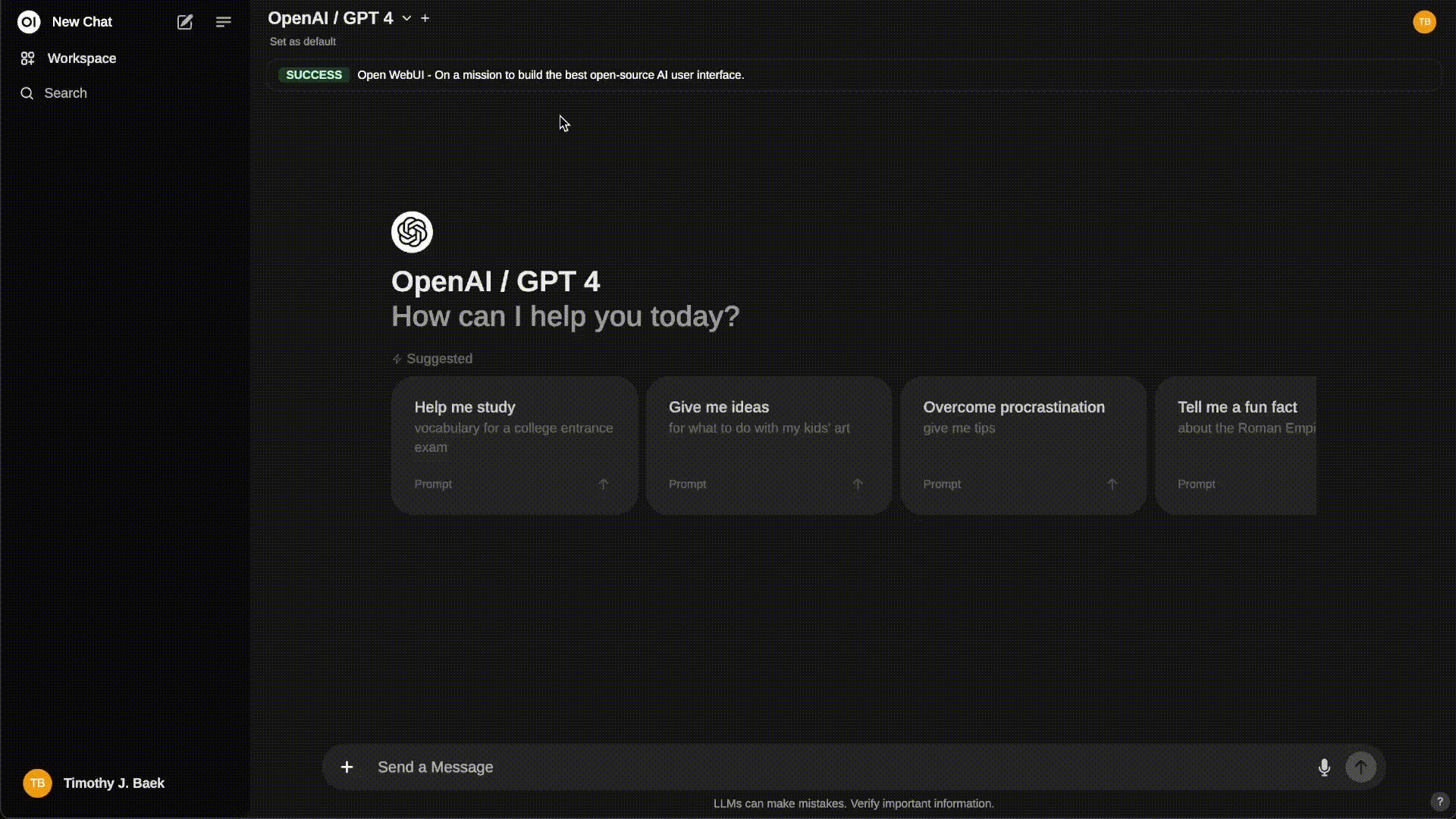

Open WebUI has quickly become one of the leading solutions for local AI deployment: a self-hosted web interface whose GitHub repository has passed 77,700 stars[1][2]. The open-source project supports large language models (LLMs) run through Ollama and also integrates with OpenAI-compatible APIs, giving businesses and developers considerable flexibility in where their models run[3][5]. Because it can operate entirely offline and scales from a single machine to larger deployments, Open WebUI is changing how teams work with AI in local environments.

Architecture and How It Works

Modern Client-Server Model

Open WebUI is built on a client-server architecture that pairs a Svelte frontend with a FastAPI (Python) backend[7]. WebSocket connections carry real-time updates, so responses appear as they are generated, much like in ChatGPT. Retrieval-Augmented Generation (RAG) is built in, with Chroma DB as the default vector store, so the model can ground its responses in internal documents[9].

Example flow for processing a query:

- The user enters a question through the web interface

- The frontend sends a request to the backend via REST API/WebSocket

- The RAG system queries Chroma DB for relevant context

- The LLM (Ollama or OpenAI API) processes the information and generates a response

- The result is streamed back to the client via WebSocket
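The five steps above can be sketched in a few lines of Python. This is an illustrative toy, not Open WebUI's implementation: an in-memory list stands in for Chroma DB, hand-written vectors stand in for an embedding model, and `fake_llm_stream` stands in for Ollama or an OpenAI API. All names here are hypothetical.

```python
import math
from typing import Iterator

# Toy in-memory "vector store" of (embedding, text) pairs. In Open WebUI this
# role is played by Chroma DB; these vectors are made up for illustration.
DOCS = [
    ([0.9, 0.1, 0.0], "Q3 revenue grew 12% year over year."),
    ([0.1, 0.8, 0.1], "The office is closed on public holidays."),
    ([0.8, 0.2, 0.1], "Operating margin in Q3 was 18%."),
]


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query_vec: list[float], k: int = 2) -> list[str]:
    """Step 3: return the k stored passages most similar to the query."""
    ranked = sorted(DOCS, key=lambda d: cosine(query_vec, d[0]), reverse=True)
    return [text for _, text in ranked[:k]]


def build_prompt(question: str, context: list[str]) -> str:
    """Combine the retrieved context and the user's question into one prompt."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Use the context to answer.\nContext:\n{joined}\nQuestion: {question}"


def fake_llm_stream(prompt: str) -> Iterator[str]:
    """Steps 4-5 stand-in: a real deployment would send the prompt to Ollama or
    an OpenAI-compatible API and stream its tokens; here we yield fixed words."""
    for word in "Revenue grew 12% in Q3.".split():
        yield word + " "


if __name__ == "__main__":
    question = "How did revenue change in Q3?"
    query_vec = [0.85, 0.15, 0.05]  # pretend output of an embedding model
    prompt = build_prompt(question, retrieve(query_vec))
    for chunk in fake_llm_stream(prompt):
        print(chunk, end="", flush=True)  # mimics tokens arriving over the socket
    print()
```

The unrelated "office" passage scores far lower than the two finance passages, which is exactly the filtering the RAG step contributes before the model sees the prompt.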

Multi-Platform Support

Open WebUI runs on both CPU and GPU through Docker[8]. On NVIDIA systems, adding the `--gpus all` flag enables GPU acceleration (the host needs the NVIDIA Container Toolkit installed). Because it is containerized, it can be deployed on platforms ranging from a Raspberry Pi to enterprise servers, though the models you run still need hardware to match.

Deployment and Management

Step-by-Step Installation with Docker

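Docker keeps deployment simple. For GPU-enabled systems, the following command runs Open WebUI together with a bundled Ollama instance:

```bash
docker run -d -p 3000:8080 --gpus all \
  -v ollama:/root/.ollama \
  -v open-webui:/app/backend/data \
  --name open-webui \
  --restart always \
  ghcr.io/open-webui/open-webui:ollama
```

The web interface is then available at http://localhost:3000. For CPU-only machines, drop the `--gpus all` flag; this variant is even lighter, requiring a minimum of 2GB RAM[4]. The two named volumes (`ollama` for downloaded models, `open-webui` for application data) persist across updates, so replacing the container does not lose chats, settings, or models.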
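To make the CPU/GPU difference concrete, here is a small Python sketch that assembles the `docker run` arguments for either case. It assumes the bundled-Ollama image tag `ghcr.io/open-webui/open-webui:ollama` (the variant the `ollama:/root/.ollama` volume is meant for) and the port, volume, and container names used above; the helper name `docker_run_args` is hypothetical.

```python
import shlex

# Assumption: the image variant that bundles Ollama alongside Open WebUI.
IMAGE = "ghcr.io/open-webui/open-webui:ollama"


def docker_run_args(use_gpu: bool, host_port: int = 3000) -> list[str]:
    """Build the `docker run` argument list for Open WebUI with bundled Ollama.

    The GPU and CPU variants differ only in the `--gpus all` flag.
    """
    args = ["docker", "run", "-d", "-p", f"{host_port}:8080"]
    if use_gpu:
        args += ["--gpus", "all"]  # needs the NVIDIA Container Toolkit on the host
    args += [
        "-v", "ollama:/root/.ollama",          # persisted Ollama models
        "-v", "open-webui:/app/backend/data",  # persisted Open WebUI data
        "--name", "open-webui",
        "--restart", "always",
        IMAGE,
    ]
    return args


if __name__ == "__main__":
    # Print a copy-pasteable shell command for a CPU-only host.
    print(shlex.join(docker_run_args(use_gpu=False)))
```

The returned list can be passed unchanged to `subprocess.run`, which sidesteps shell-quoting issues when the command is generated by provisioning scripts.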

Version Management and Updates

Automatic updates can be handled by Watchtower, a separate container that monitors running containers for newer images:

```bash
docker run -d --name watchtower \
  --restart unless-stopped \
  -v /var/run/docker.sock:/var/run/docker.sock \
  containrrr/watchtower --interval 300 open-webui
```

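Every 300 seconds, Watchtower checks for a newer `open-webui` image and, if one exists, pulls it and recreates the container with the same options, so data in the named volumes is preserved. Downtime is therefore limited to a brief container restart[8].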

Breakthrough Features for Enterprises

Multi-Source LLM Integration

Beyond Ollama, Open WebUI can connect to any OpenAI-compatible API, such as those offered by Groq or Mistral, and to other providers such as Claude through OpenAI-compatible endpoints[3][5]. Adding a new connection takes just a few clicks:

- Open Admin Panel > Settings > Connections

- Add the API base URL and key

- Name and configure parameters

- Save and test the connection
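Once a connection is saved, its models can also be called programmatically. The sketch below builds, without sending, a request for Open WebUI's OpenAI-style chat completion endpoint, `POST /api/chat/completions`, authenticated with a user API key. The base URL, key, and model name are placeholders, and the endpoint path is taken from the Open WebUI API documentation as an assumption, so verify it against your version.

```python
import json
import urllib.request

BASE_URL = "http://localhost:3000"  # assumption: the port mapped in the Docker command
API_KEY = "sk-example"              # hypothetical placeholder for a user API key


def chat_request(model: str, question: str) -> urllib.request.Request:
    """Build (but do not send) a chat-completion request in the OpenAI format."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": question}],
    }
    return urllib.request.Request(
        f"{BASE_URL}/api/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {API_KEY}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = chat_request("llama3.1", "Summarize our Q3 results.")
    print(req.get_method(), req.full_url)
    # To send it: json.load(urllib.request.urlopen(req)) returns the response body.
```

Because the payload follows the OpenAI format, the same request shape works regardless of whether the selected model is served by Ollama, Groq, or another connected provider.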

Detailed User Management System

Role-Based Access Control (RBAC) lets administrators assign permissions for individual features[5][9]. Businesses can create user groups with specific privileges, for example:

- Admin: Full system configuration rights

- Developer: Create and customize models

- User: Only use approved models

- Auditor: View activity logs
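The group arrangement above boils down to a mapping from groups to permission sets, checked with a deny-by-default rule. The Python sketch below models that idea; the group and permission names are hypothetical and mirror the list above rather than Open WebUI's built-in roles or internal permission keys.

```python
from enum import Enum


class Permission(Enum):
    CONFIGURE_SYSTEM = "configure_system"
    EDIT_MODELS = "edit_models"
    USE_APPROVED_MODELS = "use_approved_models"
    VIEW_LOGS = "view_logs"


# Hypothetical groups mirroring the list above (not Open WebUI's built-in names).
GROUPS: dict[str, set[Permission]] = {
    "admin": set(Permission),  # full rights: every permission
    "developer": {Permission.EDIT_MODELS, Permission.USE_APPROVED_MODELS},  # use assumed
    "user": {Permission.USE_APPROVED_MODELS},
    "auditor": {Permission.VIEW_LOGS},
}


def is_allowed(group: str, permission: Permission) -> bool:
    """Deny by default: unknown groups and unlisted permissions are refused."""
    return permission in GROUPS.get(group, set())


if __name__ == "__main__":
    print(is_allowed("developer", Permission.EDIT_MODELS))
    print(is_allowed("user", Permission.VIEW_LOGS))
```

Deny-by-default means a misspelled or newly created group gets no access until someone grants it explicitly.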

Enhanced RAG with Enterprise Customization

Open WebUI's RAG mechanism lets internal documents feed into the answer-generation workflow[10]. For example, to have the AI analyze a financial report PDF:

- Create a new knowledge collection

- Upload PDF/Word files

- Set up metadata and tags

- Link the collection to a model

Result: when asked about business metrics, the model retrieves relevant passages from the uploaded documents and grounds its answer in them, making responses more accurate and traceable to their source.
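Before documents can be retrieved, RAG pipelines typically split them into overlapping chunks and keep metadata such as the source file and tags next to each chunk. The Python sketch below shows that step with a simple character-based splitter; the `Chunk` type, the default sizes, and `filter_by_tag` are illustrative assumptions, not Open WebUI's internal code.

```python
from dataclasses import dataclass, field


@dataclass
class Chunk:
    text: str
    source: str                                    # e.g. the uploaded file name
    tags: list[str] = field(default_factory=list)  # metadata used for filtering


def chunk_document(text: str, source: str, tags: list[str],
                   size: int = 200, overlap: int = 40) -> list[Chunk]:
    """Split text into fixed-size character windows that overlap, so a fact
    straddling a boundary still appears whole in at least one chunk."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be in [0, size)")
    if not text:
        return []
    step = size - overlap
    # Stop once the remaining tail is already covered by the previous window.
    return [
        Chunk(text[start:start + size], source, list(tags))
        for start in range(0, max(len(text) - overlap, 1), step)
    ]


def filter_by_tag(chunks: list[Chunk], tag: str) -> list[Chunk]:
    """Keep only chunks carrying the given tag (metadata filtering)."""
    return [c for c in chunks if tag in c.tags]


if __name__ == "__main__":
    report = "Revenue grew 12% in Q3. " * 20
    parts = chunk_document(report, "q3-report.pdf", ["finance", "2025"])
    print(len(parts), [len(p.text) for p in parts])
```

The overlap trades a little storage for robustness: without it, a sentence cut in half at a chunk boundary might match no query well.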

Security and Compliance

Data Encryption

According to [7], all stored data is encrypted with AES-256, and Open WebUI supports Vault integration for key management; together these can help meet stringent compliance requirements such as GDPR or HIPAA.

Physical Access Control

Because it can run entirely offline, businesses can deploy Open WebUI on an internal network disconnected from the Internet[4][5]. In such an air-gapped deployment, prompts, documents, and responses never leave the server room; container images and models simply have to be transferred in beforehand.

Development and Expansion

Plugin Ecosystem

The modular architecture allows new features to be added via plugins, which in Open WebUI take the form of Python-based Tools and Functions[7]. For example, creating a plugin for image processing:

- Create a new Tool in the workspace

- Define the methods the model can call

- Implement the image processing logic

- Save it and enable it for the relevant models
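As an illustration, an Open WebUI Tool is written as a Python class named `Tools` whose type-hinted, documented methods the model can call. The sketch below assumes that convention; the tool itself (`thumbnail_size`) is hypothetical and sticks to pure arithmetic so it runs without any imaging library.

```python
"""
title: Thumbnail Size Helper
description: Hypothetical example tool that computes thumbnail dimensions.
"""


class Tools:
    def __init__(self):
        # Open WebUI tools can also declare configurable "Valves"; omitted here.
        pass

    def thumbnail_size(self, width: int, height: int, max_side: int = 256) -> str:
        """
        Compute thumbnail dimensions that fit within max_side while keeping
        the original aspect ratio.
        :param width: Original image width in pixels.
        :param height: Original image height in pixels.
        :param max_side: Longest allowed side of the thumbnail.
        """
        if width <= 0 or height <= 0:
            return "Width and height must be positive."
        # Never upscale: images already smaller than max_side keep their size.
        scale = min(1.0, max_side / max(width, height))
        new_w = max(1, round(width * scale))
        new_h = max(1, round(height * scale))
        return f"{new_w}x{new_h}"


if __name__ == "__main__":
    print(Tools().thumbnail_size(1920, 1080))
```

The docstring and type hints matter here: they are what describes the method and its parameters to the model, so the model knows when and how to call it.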

CI/CD Integration

Open WebUI exposes an HTTP API that can be called from DevOps pipelines[8]. The following sample GitLab CI job deploys a new model (the `/api/models` upload route and its form fields are as given in [8]):

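```yaml
deploy_model:
  stage: deploy
  script:
    # --fail makes curl exit non-zero on HTTP errors, so the job fails visibly
    - |
      curl --fail -X POST "${OPENWEBUI_URL}/api/models" \
        -H "Authorization: Bearer ${API_KEY}" \
        -F "model=@${MODEL_FILE}" \
        -F "config=@${CONFIG_FILE}"
```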
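A follow-up job step could confirm the deployment by calling `GET ${OPENWEBUI_URL}/api/models` with the same bearer token and checking that the new model is listed. The Python helper below performs only that check on the response body; it assumes an OpenAI-style body of the form `{"data": [{"id": ...}]}`, and the helper name and sample model IDs are hypothetical.

```python
import json


def model_is_listed(models_response: str, model_id: str) -> bool:
    """Return True if model_id appears in an OpenAI-style model list.

    Assumes a body shaped like {"data": [{"id": "..."}, ...]}; a missing
    "data" key is treated as an empty list.
    """
    body = json.loads(models_response)
    return any(m.get("id") == model_id for m in body.get("data", []))


if __name__ == "__main__":
    sample = '{"data": [{"id": "llama3.1:8b"}, {"id": "finance-assistant"}]}'
    print(model_is_listed(sample, "finance-assistant"))
```

In the pipeline, a non-zero exit (for example `sys.exit(1)` when the check returns False) would mark the deployment as failed even if the upload request itself succeeded.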

Trends and Future

Edge AI and IoT

The ability to run on edge devices like Raspberry Pi opens up applications in the IoT field[10]. For example, deploying a smart security monitoring system:

- Cameras integrated with Open WebUI

- Local image analysis by a vision-capable model

- Real-time alerts without needing the cloud
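For the camera scenario, the image analysis can be a plain HTTP call to a local vision model. The sketch below builds a request for Ollama's `/api/generate` endpoint (default port 11434), which accepts base64-encoded images. It talks to the Ollama backend directly rather than through Open WebUI, and the model name `llava`, the prompt, and the yes/no alert rule are assumptions for illustration.

```python
import base64
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default port
MODEL = "llava"  # assumption: any locally pulled vision-capable model


def frame_request(jpeg_bytes: bytes) -> urllib.request.Request:
    """Build a non-streaming request asking a local vision model about one
    camera frame. The image never leaves the local network."""
    payload = {
        "model": MODEL,
        "prompt": "Is there a person in this image? Answer yes or no.",
        "images": [base64.b64encode(jpeg_bytes).decode("ascii")],
        "stream": False,
    }
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def should_alert(model_answer: str) -> bool:
    """Naive alert rule (assumption): trigger when the answer starts with 'yes'."""
    return model_answer.strip().lower().startswith("yes")
```

To run it against a live Ollama instance, `json.load(urllib.request.urlopen(req))["response"]` returns the model's answer, which can then be passed to `should_alert`.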

Multi-Modal Support

The 2025 roadmap for Open WebUI plans additional support for real-time video processing and AR[7], which could open up new uses in telemedicine and remote assistance.

Conclusion

Open WebUI is not just a web interface but a complete self-hosted AI ecosystem. With over 230 contributors and a community of 156,000 users[6][8], the project is shaping the future of decentralized AI. Businesses can start with the free community version and later upgrade to enterprise for SLA support and advanced features like custom branding[5][8].

The trend toward self-hosted AI is growing amid concerns about privacy and cloud costs. Open WebUI gives businesses a practical way to take control of their AI stack, optimizing processes while keeping their data safe.

Sources