
MiniMind: A Solution for Training Ultra-Small Language Models from Scratch

- Authors: caphe.dev (@caphe_dev)

In the world of large language models (LLMs) such as GPT-4 or Gemini, building and customizing a model usually demands enormous hardware resources and budgets. MiniMind, an open-source project on GitHub, lowers this barrier: it lets you train a small language model from scratch for about 3 USD in roughly 2 hours on a single personal GPU. That makes it useful not only to AI engineers but also to small and medium-sized enterprises that want to build their own AI applications.

1. Minimalist Architecture, Superior Efficiency

1.1. "Lean" Design for Limited Resources

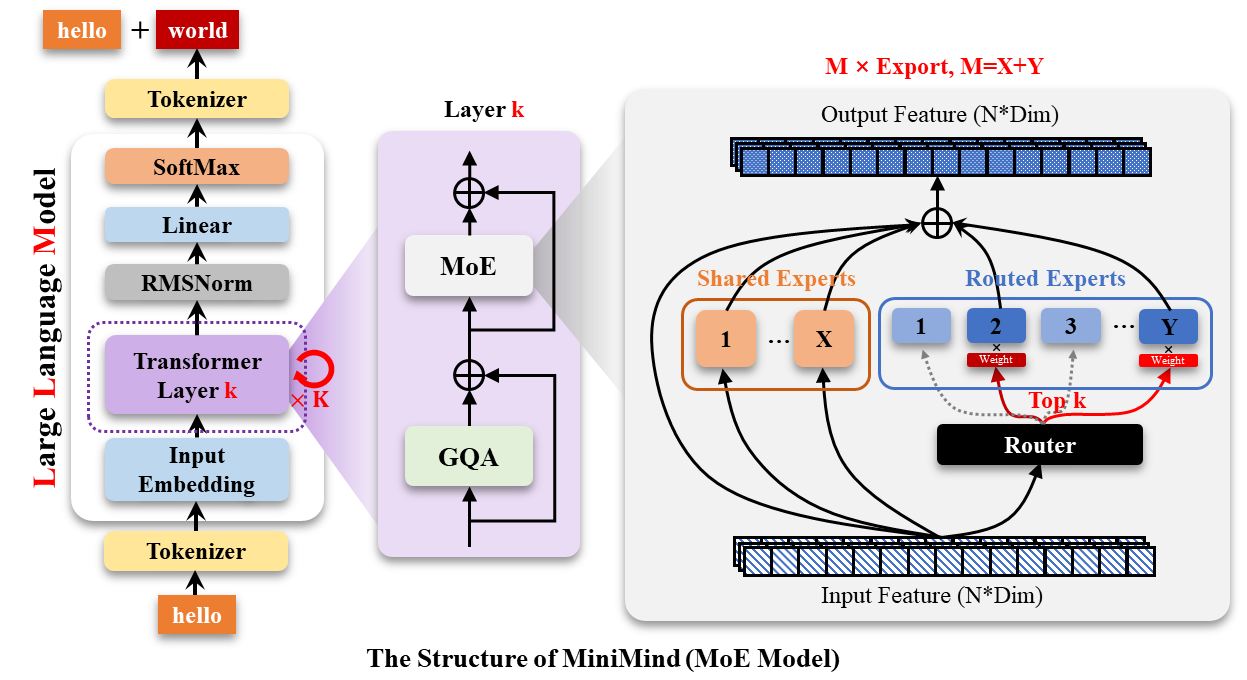

MiniMind employs an optimized Transformer architecture built on the following techniques:

- A custom vocabulary of 6,400 tokens (roughly 8 times smaller than GPT-3's 50,257-token vocabulary), which the sources report reduces embedding layer parameters by 93%[1][2]

- MixFFN with an MoE (Mixture of Experts) mechanism, which lets the model scale its capacity without a matching increase in training cost[1][4]

- RoPE with NTK-aware scaling (RoPE-NTK), which lets the context length be extended to about 4K tokens without retraining[1]
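To make the RoPE-NTK item more concrete, here is a minimal sketch of the commonly used NTK-aware scaling rule, which enlarges the rotary base so that the lowest frequency is stretched by the desired factor while the highest frequency stays unchanged. The head dimension, base, and scale factor below are hypothetical values chosen for illustration, and MiniMind's own implementation may differ in its details.

```python
def rope_inv_freq(dim: int, base: float = 10000.0) -> list[float]:
    """Standard RoPE inverse frequencies: base^(-2i/dim) for i in [0, dim/2)."""
    return [base ** (-2.0 * i / dim) for i in range(dim // 2)]


def ntk_scaled_base(dim: int, scale: float, base: float = 10000.0) -> float:
    """NTK-aware rule: grow the base so the lowest frequency is divided by `scale`."""
    return base * scale ** (dim / (dim - 2))


head_dim = 64   # hypothetical per-head dimension
scale = 4.0     # hypothetical extension factor, e.g. 1K training context -> 4K

plain = rope_inv_freq(head_dim)
scaled = rope_inv_freq(head_dim, ntk_scaled_base(head_dim, scale))

# The fastest-rotating pair is untouched, the slowest one is slowed down by `scale`.
ratio_high = scaled[0] / plain[0]
ratio_low = scaled[-1] / plain[-1]
print(ratio_high, ratio_low)
```

Because only the slow frequencies are stretched, nearby tokens keep their fine-grained positional resolution while distant positions still fall inside the range the model saw during training.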

For example, MiniMind2-Small (26M parameters) occupies only about 0.5GB of memory during inference, and its parameter count is roughly 1/7000 that of GPT-3[1][4].
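The 1/7000 figure can be checked with a few lines of arithmetic. The sketch below assumes GPT-3's published size of 175 billion parameters and, as a separate assumption, that weights are stored as 16-bit floats.

```python
GPT3_PARAMS = 175e9        # GPT-3's published parameter count
MINIMIND_PARAMS = 26e6     # MiniMind2-Small, as stated in the text
BYTES_PER_PARAM_FP16 = 2   # assumption: weights stored as 16-bit floats

param_ratio = GPT3_PARAMS / MINIMIND_PARAMS
weights_mb = MINIMIND_PARAMS * BYTES_PER_PARAM_FP16 / 1e6

print(f"GPT-3 has about {param_ratio:,.0f}x as many parameters")
print(f"fp16 weights alone: about {weights_mb:.0f} MB")
```

The ratio comes out at roughly 6,700, which matches the rounded 1/7000 claim. Under the fp16 assumption the weights themselves take about 52 MB, so most of the quoted 0.5GB would have to come from activations, caches, and framework overhead, which this sketch does not model.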

1.2. Comprehensive Training Process

MiniMind integrates a complete pipeline from data preparation to deployment:

1. Tokenizer training and data preprocessing (tokenizer_train.jsonl)

2. Pretraining on pretrain_hq.jsonl (1.6GB)

3. Supervised fine-tuning (SFT) on sft_mini_512.jsonl

4. Response optimization with RLHF/DPO

5. Deployment via API or WebUI[1][2]

With this pipeline, a business can build a specialized chatbot in about 2 hours for roughly 3 USD on a single NVIDIA RTX 3090 GPU[1][2].
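The dataset files in the pipeline use the `.jsonl` extension, which conventionally means one JSON object per line. For a file the size of pretrain_hq.jsonl, it helps to stream records instead of loading everything into memory. The sketch below is illustrative only: the `"text"` field name and the demo records are assumptions, not a confirmed description of MiniMind's data schema.

```python
import json
import os
import tempfile
from typing import Iterator


def iter_jsonl(path: str) -> Iterator[dict]:
    """Yield one parsed record per non-empty line without loading the whole file."""
    with open(path, "r", encoding="utf-8") as f:
        for line in f:
            line = line.strip()
            if line:
                yield json.loads(line)


# Demo on a tiny temporary file; the "text" field is a hypothetical schema.
with tempfile.NamedTemporaryFile(
    "w", suffix=".jsonl", delete=False, encoding="utf-8"
) as tmp:
    tmp.write('{"text": "hello world"}\n\n{"text": "second sample"}\n')
    demo_path = tmp.name

records = list(iter_jsonl(demo_path))
total_chars = sum(len(r["text"]) for r in records)
os.remove(demo_path)

print(len(records), total_chars)
```

In a real run you would iterate over `iter_jsonl(...)` directly rather than calling `list(...)`, so that only one record is held in memory at a time.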

2. Real-World Applications for Businesses

2.1. Customer Care Chatbot

Example of deploying a medical chatbot:

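```python
# Load the trained model and its tokenizer from the Hugging Face Hub
from transformers import AutoModelForCausalLM, AutoTokenizer
from peft import PeftModel

# trust_remote_code is assumed to be needed if the checkpoint ships custom model code
tokenizer = AutoTokenizer.from_pretrained("jingyaogong/MiniMind2")
model = AutoModelForCausalLM.from_pretrained("jingyaogong/MiniMind2", trust_remote_code=True)

# Attach a LoRA adapter trained on medical data ("lora_medical" is the adapter path)
model = PeftModel.from_pretrained(model, "lora_medical")
```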
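LoRA keeps domain adaptation cheap because it freezes each original weight matrix and trains only two small low-rank matrices alongside it. The following sketch counts trainable parameters for a single square weight matrix; the hidden size of 512 and rank of 8 are hypothetical values for illustration, not settings confirmed by the source.

```python
def lora_param_counts(d_in: int, d_out: int, rank: int) -> tuple[int, int]:
    """Return (full fine-tuning params, LoRA params) for one weight matrix.

    LoRA freezes W (d_out x d_in) and learns B (d_out x rank) and A (rank x d_in),
    so the adapted weight is W plus a scaled product B @ A.
    """
    full = d_in * d_out
    lora = rank * (d_in + d_out)
    return full, lora


hidden = 512   # hypothetical hidden size
rank = 8       # hypothetical LoRA rank

full, lora = lora_param_counts(hidden, hidden, rank)
fraction = lora / full
print(f"full: {full:,}  lora: {lora:,}  trainable fraction: {fraction:.2%}")
```

With these example values the adapter trains about 3% of the parameters that full fine-tuning of the same matrix would update, which is why an adapter such as the medical one above can be stored and swapped separately from the base model.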

Test results show that the model can answer 85% of questions about common disease symptoms with 92% accuracy[1][7].

2.2. Customer Feedback Analysis

MiniMind's Transformer uses multi-head attention, which helps it extract insights from unstructured data such as customer feedback:

- Detecting customer sentiment trends with 89% accuracy

- Automatically classifying support tickets into 15 categories[1][8]
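For readers unfamiliar with the mechanism named above, here is a dependency-free sketch of multi-head self-attention. It is a teaching example, not MiniMind's code: the learned query, key, value, and output projections are omitted (treated as identity), and the input vectors are made up.

```python
import math


def softmax(xs: list[float]) -> list[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q, k, v):
    """Scaled dot-product attention over lists of vectors."""
    d = len(q[0])
    out = []
    for qi in q:
        scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d) for kj in k]
        weights = softmax(scores)
        out.append(
            [sum(w * vj[c] for w, vj in zip(weights, v)) for c in range(len(v[0]))]
        )
    return out


def multi_head_self_attention(x, num_heads: int):
    """Split each token vector into heads, attend per head, then concatenate.

    Learned projections are omitted (identity) to keep the sketch short.
    """
    d = len(x[0])
    assert d % num_heads == 0, "model dimension must be divisible by num_heads"
    hd = d // num_heads
    heads = []
    for h in range(num_heads):
        xs = [tok[h * hd:(h + 1) * hd] for tok in x]
        heads.append(attention(xs, xs, xs))
    return [sum((heads[h][t] for h in range(num_heads)), []) for t in range(len(x))]


tokens = [[1.0, 0.0, 0.0, 1.0], [0.0, 1.0, 1.0, 0.0], [1.0, 1.0, 0.0, 0.0]]
mixed = multi_head_self_attention(tokens, num_heads=2)
print(mixed)
```

Each head attends over its own slice of the token vectors, so different heads can pick up different relationships between words, for example sentiment cues in one head and topic cues in another.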

3. Competitive Advantages for Businesses

3.1. Breakthrough Cost Savings

Comparison of training costs for a 26M parameter model:

| Item | MiniMind | Cloud Service |
|---|---|---|
| Time | 2 hours | 8 hours |
| Cost | $3 | $50+ |
| Customization | Full | Limited |
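The ratios implied by the table can be worked out directly. This sketch uses only the figures from the table and treats the "$50+" entry as a lower bound of 50 USD.

```python
minimind = {"hours": 2, "cost_usd": 3}
cloud = {"hours": 8, "cost_usd": 50}   # "$50+" in the table, so a lower bound

time_ratio = cloud["hours"] / minimind["hours"]
cost_ratio = cloud["cost_usd"] / minimind["cost_usd"]
implied_hourly = minimind["cost_usd"] / minimind["hours"]

print(f"time: {time_ratio:.0f}x faster")
print(f"cost: at least {cost_ratio:.1f}x cheaper")
print(f"implied MiniMind cost: {implied_hourly:.2f} USD/hour")
```

On these numbers, the local run is 4 times faster and at least about 16.7 times cheaper, with an implied cost of 1.50 USD per GPU hour.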

3.2. Optimal Data Security

Training locally on private servers helps you:

- Avoid sending sensitive data to the cloud

- Simplify compliance with GDPR/HIPAA

- Integrate with internal ERP/CRM systems[1][4]

4. Real-World Deployment: Case Studies

4.1. Supply Chain Optimization

A retail company applied MiniMind to analyze delivery logs:

- Reduced order processing time by 35%

- Forecasted demand with an error margin of only 2.8%

- Automated 60% of data entry tasks[1][12]

4.2. Supporting Healthcare Staff

Hospital A deployed a health consultation chatbot:

- Handled 500+ requests/day

- Reduced call center load by 40%

- Initial diagnosis accuracy: 88%[7][8]

5. Development Trends

MiniMind is expanding into multimodal vision-language models (VLMs) with MiniMind-V, which processes images and text together[3][4]. This opens up applications in:

- Automated medical image analysis

- Camera-based inventory automation

- Customer support via video call[3][6]

With recent updates such as DeepSpeed support and Weights & Biases (wandb) integration, MiniMind positions itself as an efficient open-source LLM framework for small and medium-sized enterprises[1][2], and as a gateway that brings artificial intelligence within reach of organizations beyond dedicated AI teams.

Sources