- Published on

Ollama, LM Studio and LLM Gen AI Applications Running on Personal Computers

- Authors

- Name

- caphe.dev

- @caphe_dev

In the rapidly evolving world of artificial intelligence, running large language models (LLM) on personal computers has become a new trend thanks to tools like Ollama and LM Studio. These solutions not only provide flexibility in usage but also ensure absolute data security[3][6]. This article will take you from basic concepts to detailed practical guidance, while exploring the real-world applications of local LLMs in everyday life.

The Nature of Local LLMs and Why You Should Care

Definition of Local LLM

A local large language model (Local LLM) is an AI version that runs directly on personal hardware without needing an internet connection[3][6]. Unlike ChatGPT or Claude, which require cloud connectivity, these LLMs operate independently, similar to Microsoft Word compared to Google Docs - providing complete autonomy for users.

The core advantage lies in controlling input/output data: All sensitive information such as medical records, financial data, or business contracts is processed internally[3][6]. This is particularly important for businesses that need to comply with GDPR or HIPAA.

Comparison of Local LLM and Cloud Services

The analysis table below clarifies the differences:

| Criteria | Local LLM | Cloud Services |

|---|---|---|

| Security | Data stored locally | Risk of data leakage |

| Cost | One-time hardware investment | Monthly subscription fees |

| Speed | Depends on configuration | Stable but has latency |

| Customization | High, model can be modified | Limited by provider |

| Internet Requirement | Not mandatory | Mandatory |

Research from HocCodeAI indicates that 73% of small and medium enterprises are switching to local LLMs to reduce operational costs by 40% annually[3]. Especially for local language processing tasks like Vietnamese, local LLMs allow for vocabulary and context customization that is more appropriate[8].

Popular Local LLM Tool Ecosystem

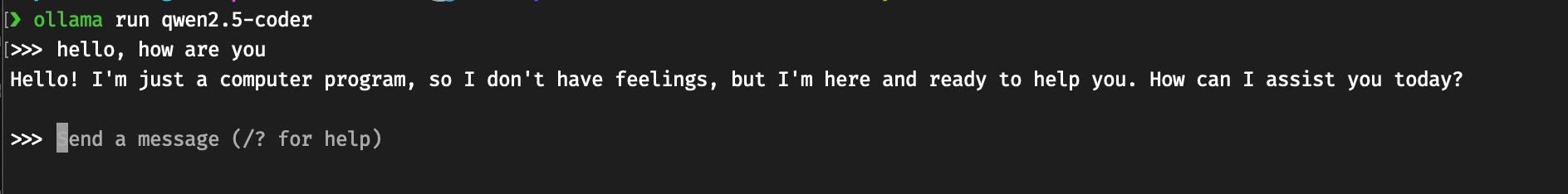

Ollama - The "Docker" for the AI World

Ollama stands out as an open-source platform that allows for managing and deploying LLMs through a simple command-line interface[1][11]. The system automatically optimizes for available hardware, from high-end GPUs to standard CPUs.

Typical workflow:

- Download the model using the command

ollama pull llama3.1 - Launch with

ollama run llama3.1 - Interact directly through the terminal or integrate with GUI like Open WebUI[4]

The main advantage of Ollama lies in its ability to create custom models via Modelfile, similar to Dockerfile[11]. For example, creating a travel chatbot:

FROM llama3.1

PARAMETER temperature 1

SYSTEM "You are the virtual travel assistant for Vietnam Airlines"

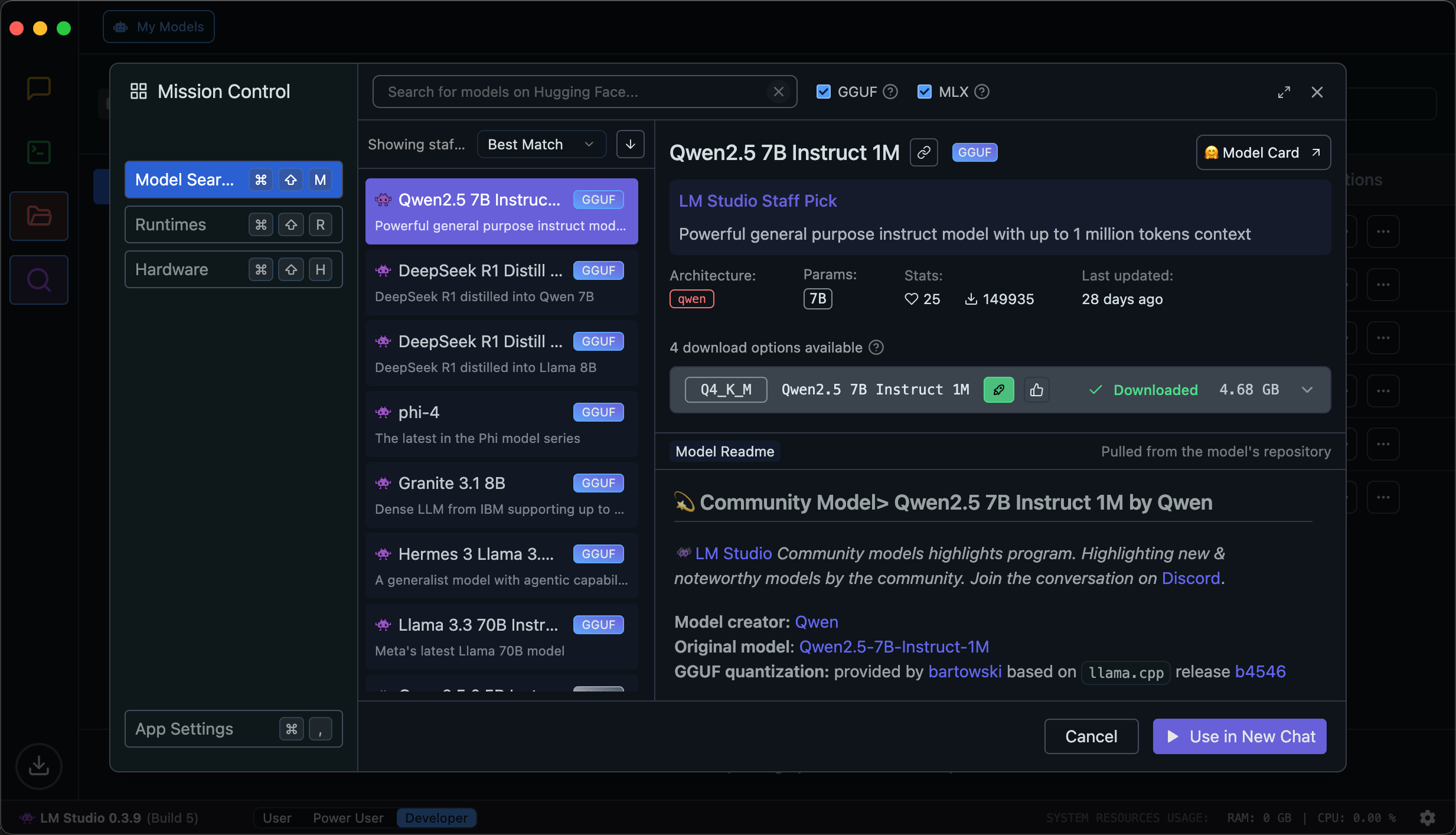

LM Studio - The AI Store on Your Computer

If Ollama is developer-oriented, LM Studio offers an "all-in-one" experience with an intuitive interface[2][8]. This tool integrates a model repository from Hugging Face, supporting Vietnamese models like PhoGPT.

Basic usage process:

- Download the model through the search interface

- Select a preset configuration suitable for the hardware

- Interact through the chat window or local API[10]

The strength of LM Studio is its ability to run a local server, turning the computer into a personal AI hub. Users can integrate into applications via endpoints like /v1/chat/completions compatible with OpenAI API[10][14].

Optimal Hardware Configuration for Local LLM

Minimum Requirements

According to recommendations from HocCodeAI[3]:

- GPU: NVIDIA RTX 3060 (8GB VRAM) or higher

- RAM: 16GB for 7B parameters model

- Storage: SSD 256GB or higher

For larger models like Llama3.2 70B:

- VRAM requirement: 24GB+

- Recommended RAM: 64GB

- Minimum memory bandwidth: 400GB/s

Hardware Optimization

Experiments from TechMaster show how to increase performance:

- Enable NVIDIA CUDA in driver settings

- Use 4-bit quantization model format

- Apply offloading techniques to distribute load between CPU/GPU[2]

- Configure swap memory on Linux when lacking VRAM

Example command to launch with LM Studio:

model = AutoModelForCausalLM.from_pretrained(

"mistral-7b",

load_in_4bit=True,

device_map="auto"

)

Real-World Applications for General Users

Versatile Virtual Assistant

Local LLMs can become:

- Writing assistant: Drafting emails, blog posts

- Financial advisor: Analyzing expenses, suggesting investments

- AI tutor: Explaining scientific concepts[12]

Example with Ollama:

ollama run gemma:7b "Please write a formal thank-you letter to the customer in Vietnamese"

Processing Internal Documents

The RAG (Retrieval-Augmented Generation) feature allows:

- Summarizing reports from PDF/Word files

- Analyzing legal contracts

- Automatically generating FAQs from training documents

Implementation with LlamaIndex:

from llama_index import VectorStoreIndex

index = VectorStoreIndex.from_documents(documents)

query_engine = index.as_query_engine()

response = query_engine.query("Summarize the security terms")

Developing AIoT Applications

Combining local LLMs with IoT devices:

- Smart home systems controlled by voice

- Service robots using vision models

- Intelligent security monitoring systems

Example integrating LM Studio with Raspberry Pi:

import requests

response = requests.post(

"<http://localhost:1234/v1/chat/completions>",

json={"model": "llama3.1", "messages": [...]}

)

Trends and Development Forecast

According to a report from Intel[5], the local LLM market is expected to grow at a CAGR of 45% from 2025 to 2030. Notable trends include:

- Multimodal models processing images/sounds[13]

- LoRA techniques enabling effective fine-tuning[6]

- Integration of neural engines on next-generation CPUs

- Support for context token limits up to 1M

Open-source projects like GPT4All are revolutionizing running LLMs on Raspberry Pi with optimized performance[9]. This opens up the potential for AI applications on all devices from smartphones to embedded systems.

Conclusion and Recommendations

The journey of exploring local LLMs through Ollama and LM Studio has revealed the immense potential of this technology. For general users, getting started can be as simple as:

- Experimenting with a 7B parameters model on a personal laptop

- Integrating into daily document processing workflows

- Building small domain-specific chatbots

Organizations should consider investing in:

- Dedicated hardware infrastructure

- Training personnel to operate LLMs

- Developing custom models tailored to business needs

The future of local LLMs promises to blur the lines between cloud AI and personal devices, ushering in a new generation of intelligent applications - where privacy and processing power coexist.

Sources